Orange Pi-RKNN-YOLOv5


Environment

- RKNN version: 1.4.0
- Orange Pi OS: Ubuntu 20
- Python on the Orange Pi: 3.7
- PC OS: Ubuntu 20
- Python on the PC: 3.8
- Hardware: Orange Pi 5

Required source code

- https://github.com/rockchip-linux/rknn-toolkit2.git
- https://github.com/rockchip-linux/rknpu2.git
- https://github.com/ultralytics/yolov5.git

RKNN deployment on the Orange Pi

Download the code

```bash
git clone https://github.com/rockchip-linux/rknn-toolkit2.git
cd rknn-toolkit2
git checkout v1.6.0
```

Check which Python versions RKNN 1.6.0 supports:

```bash
ls rknn_toolkit_lite2/packages

# Result
rknn_toolkit_lite2-1.6.0-cp310-cp310-linux_aarch64.whl
rknn_toolkit_lite2-1.6.0-cp37-cp37m-linux_aarch64.whl
rknn_toolkit_lite2-1.6.0-cp39-cp39-linux_aarch64.whl
rknn_toolkit_lite2-1.6.0-cp311-cp311-linux_aarch64.whl
rknn_toolkit_lite2-1.6.0-cp38-cp38-linux_aarch64.whl
rknn_toolkit_lite2_1.6.0_packages.md5sum
```

Any Python version from 3.7 to 3.11 can be used.
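Choosing a wheel only means reading the `cpXY` tag in each filename. As a small sketch, assuming the usual `name-version-pythontag-abitag-platform.whl` wheel naming, the tags can be parsed as shown below. The helpers `supported_pythons` and `pick_wheel` are hypothetical and not part of RKNN.

```python
import re
import sys

# Wheel filenames look like name-version-{python tag}-{abi tag}-{platform}.whl,
# e.g. rknn_toolkit_lite2-1.6.0-cp37-cp37m-linux_aarch64.whl
WHEEL_RE = re.compile(r"-cp(\d)(\d+)-cp\d+m?-\w+\.whl$")


def supported_pythons(filenames):
    """Return the sorted (major, minor) CPython versions found in wheel filenames."""
    versions = set()
    for name in filenames:
        match = WHEEL_RE.search(name)
        if match:  # non-wheel files such as the .md5sum are skipped
            versions.add((int(match.group(1)), int(match.group(2))))
    return sorted(versions)


def pick_wheel(filenames, version=tuple(sys.version_info[:2])):
    """Return the wheel built for the given (major, minor) version, or None."""
    tag = "-cp{}{}-".format(*version)
    for name in filenames:
        if name.endswith(".whl") and tag in name:
            return name
    return None


packages = [
    "rknn_toolkit_lite2-1.6.0-cp310-cp310-linux_aarch64.whl",
    "rknn_toolkit_lite2-1.6.0-cp37-cp37m-linux_aarch64.whl",
    "rknn_toolkit_lite2-1.6.0-cp39-cp39-linux_aarch64.whl",
    "rknn_toolkit_lite2-1.6.0-cp311-cp311-linux_aarch64.whl",
    "rknn_toolkit_lite2-1.6.0-cp38-cp38-linux_aarch64.whl",
    "rknn_toolkit_lite2_1.6.0_packages.md5sum",
]
print(supported_pythons(packages))  # [(3, 7), (3, 8), (3, 9), (3, 10), (3, 11)]
print(pick_wheel(packages, (3, 7)))  # rknn_toolkit_lite2-1.6.0-cp37-cp37m-linux_aarch64.whl
```

Called without a version, `pick_wheel` uses the running interpreter, which is handy after `conda activate`.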

Install Python 3.7

```bash
conda create -n py37 python=3.7
conda activate py37
pip install rknn_toolkit_lite2/packages/rknn_toolkit_lite2-1.6.0-cp37-cp37m-linux_aarch64.whl
pip install opencv-python
```

Run the test program:

```bash
cd rknn_toolkit_lite2/examples/dynamic_shape
python test.py
```

Output:

```
--> Load RKNN model
done
--> Init runtime environment
I RKNN: [11:16:18.618] RKNN Runtime Information: librknnrt version: 1.4.0 (a10f100eb@2022-09-09T09:07:14)
I RKNN: [11:16:18.618] RKNN Driver Information: version: 0.8.2
E RKNN: [11:16:18.618] 6, 1
E RKNN: [11:16:18.618] Invalid RKNN model version 6
E RKNN: [11:16:18.618] rknn_init, load model failed!
E Catch exception when init runtime!
E Traceback (most recent call last):
  File "/opt/conda/envs/py37/lib/python3.7/site-packages/rknnlite/api/rknn_lite.py", line 148, in init_runtime
    self.rknn_runtime.build_graph(self.rknn_data, self.load_model_in_npu)
  File "rknnlite/api/rknn_runtime.py", line 919, in rknnlite.api.rknn_runtime.RKNNRuntime.build_graph
Exception: RKNN init failed. error code: RKNN_ERR_FAIL
Init runtime environment failed
```

The line `librknnrt version: 1.4.0` in the output shows that the RKNN runtime on this board is version 1.4.0, and that it rejects the model shipped with the 1.6.0 example (`Invalid RKNN model version 6`).

Next, we therefore install RKNN 1.4.0 to match the runtime.
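The version check we just did by eye can also be scripted. This is a minimal sketch, assuming the log format shown above. `runtime_version` and `same_major_minor` are hypothetical helpers, and "same major.minor" is only the rule this article follows in practice (convert with the toolkit version that matches the board's runtime), not an official Rockchip compatibility table.

```python
import re

# Matches e.g. "librknnrt version: 1.4.0 (a10f100eb@2022-09-09T09:07:14)"
RUNTIME_RE = re.compile(r"librknnrt version: (\d+)\.(\d+)\.(\d+)")


def runtime_version(log_text):
    """Return the librknnrt version as a tuple of ints, or None if it is not in the log."""
    match = RUNTIME_RE.search(log_text)
    if match is None:
        return None
    return tuple(int(part) for part in match.groups())


def same_major_minor(runtime, toolkit):
    """Hypothetical rule: treat versions as compatible when major.minor agree."""
    return runtime[:2] == toolkit[:2]


log = ("I RKNN: [11:16:18.618] RKNN Runtime Information: "
       "librknnrt version: 1.4.0 (a10f100eb@2022-09-09T09:07:14)")
rt = runtime_version(log)
print(rt)                               # (1, 4, 0)
print(same_major_minor(rt, (1, 6, 0)))  # False: matches the failed 1.6.0 run above
print(same_major_minor(rt, (1, 4, 0)))  # True: convert with rknn-toolkit2 1.4.0
```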

Installing RKNN 1.4.0

- Switch to RKNN 1.4.0

```bash
git checkout v1.4.0
```

- Check the supported Python versions

```bash
ls rknn_toolkit_lite2/packages

# Result: Python 3.7 and 3.9 are supported
rknn_toolkit_lite2-1.4.0-cp37-cp37m-linux_aarch64.whl
rknn_toolkit_lite2-1.4.0-cp39-cp39-linux_aarch64.whl
rknn_toolkit_lite2_1.4.0_packages.md5sum
```

- Set up a Python 3.7 environment

```bash
conda create -n py37 python=3.7
conda activate py37
```

- Install rknn_toolkit_lite2

```bash
pip install rknn_toolkit_lite2/packages/rknn_toolkit_lite2-1.4.0-cp37-cp37m-linux_aarch64.whl
pip install opencv-python
```

- Verify the installation

```bash
# If no error is printed, the installation succeeded
python -c "from rknnlite.api import RKNNLite"
```
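When that import fails, the cause is often simply that the wrong conda environment is active. Below is a slightly more talkative variant of the check, as a sketch. The `check_module` helper is hypothetical; it reports every package this tutorial installs without stopping at the first missing one. `importlib.util.find_spec` only confirms that a package can be located, so the one-line import above remains the stronger test, since it actually runs the package's import code.

```python
import importlib.util
import sys


def check_module(name):
    """Return a one-line status for a top-level module without importing it."""
    if importlib.util.find_spec(name) is None:
        return "{}: NOT FOUND (is the right conda env active?)".format(name)
    return "{}: OK".format(name)


print("Python", sys.version.split()[0], "at", sys.executable)
# rknnlite comes from the rknn_toolkit_lite2 wheel, cv2 from opencv-python,
# and numpy is installed as a dependency of opencv-python.
for module in ("rknnlite", "cv2", "numpy"):
    print(check_module(module))
```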

RKNN deployment on a Linux PC

Since the Orange Pi can only use version 1.4.0, the PC must use 1.4.0 as well.

- Download the code at the matching tag

```bash
git clone https://github.com/rockchip-linux/rknn-toolkit2.git
cd rknn-toolkit2
git checkout v1.4.0
```

- Check which Python versions RKNN 1.4.0 supports (Python 3.6 and 3.8 can be used)

```bash
ls packages/

# Result
md5sum.txt
rknn_toolkit2-1.4.0_22dcfef4-cp36-cp36m-linux_x86_64.whl
rknn_toolkit2-1.4.0_22dcfef4-cp38-cp38-linux_x86_64.whl
```

- Create a Python 3.8 environment

```bash
conda create -n py38 python=3.8
conda activate py38
```

- Install rknn_toolkit2

```bash
pip install packages/rknn_toolkit2-1.4.0_22dcfef4-cp38-cp38-linux_x86_64.whl
```

- Run `test.py` in the yolov5 example directory

```bash
cd examples/onnx/yolov5
python test.py

# Result
--> Export rknn model
done
--> Init runtime environment
W init_runtime: Target is None, use simulator!
done
--> Running model
Analysing : 100%|███████████████████████████████████████████████| 146/146 [00:00<00:00, 5137.71it/s]
Preparing : 100%|███████████████████████████████████████████████| 146/146 [00:00<00:00, 1165.36it/s]
W inference: The dims of input(ndarray) shape (640, 640, 3) is wrong, expect dims is 4! Try expand dims to (1, 640, 640, 3)!
done
class: person, score: 0.8223356008529663
box coordinate left,top,right,down: [473.26745200157166, 231.93780636787415, 562.1268351078033, 519.7597033977509]
class: person, score: 0.817978024482727
box coordinate left,top,right,down: [211.9896697998047, 245.0290389060974, 283.70787048339844, 513.9374527931213]
class: person, score: 0.7971192598342896
box coordinate left,top,right,down: [115.24964022636414, 232.44154334068298, 207.7837154865265, 546.1097872257233]
class: person, score: 0.4627230763435364
box coordinate left,top,right,down: [79.09242534637451, 339.18042743206024, 121.60038471221924, 514.234916806221]
class: bus , score: 0.7545359134674072
box coordinate left,top,right,down: [86.41703361272812, 134.41848754882812, 558.1083570122719, 460.4184875488281]
```

With no target specified, inference runs in the PC simulator (`Target is None, use simulator!`). `yolov5s.rknn` is the model that gets generated.

- Adapt the model for RK3588

Make three changes to `test.py`:

```python
# Line 239
rknn = RKNN(verbose=True)
# replace with
rknn = RKNN()
```

```python
# Line 243
rknn.config(mean_values=[128, 128, 128], std_values=[128, 128, 128])
# replace with
rknn.config(mean_values=[128, 128, 128], std_values=[128, 128, 128], target_platform='rk3588')
```

```python
# Line 272
ret = rknn.init_runtime()
# replace with
ret = rknn.init_runtime(target='rk3588')
```

- Run `test.py` to generate `yolov5s.rknn`

```bash
python test.py

# Result
done
--> Export rknn model
done
--> Init runtime environment
I target set by user is: rk3588
I Starting ntp or adb, target is RK3588
I Start adb...
error: no devices/emulators found
E init_runtime: Connect to Device Failure (-1), Please make sure the USB connection is normal!
E init_runtime: Catch exception when init runtime!
E init_runtime: Traceback (most recent call last):
E init_runtime:   File "rknn/api/rknn_base.py", line 1975, in rknn.api.rknn_base.RKNNBase.init_runtime
E init_runtime:   File "rknn/api/rknn_runtime.py", line 194, in rknn.api.rknn_runtime.RKNNRuntime.__init__
E init_runtime:   File "rknn/api/rknn_platform.py", line 331, in rknn.api.rknn_platform.start_ntp_or_adb
E init_runtime: Exception: Init runtime environment failed!
Init runtime environment failed!
```

The `init_runtime` error at the end is expected: with `target='rk3588'` the toolkit tries to reach a board over adb (`error: no devices/emulators found`), and none is connected. The model was already written in the `Export rknn model` step before that, so `yolov5s.rknn` is still usable.

- Copy `yolov5s.rknn` to the Orange Pi
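In the simulator run, the toolkit warned `The dims of input(ndarray) shape (640, 640, 3) is wrong, expect dims is 4!` and then expanded the input on its own. The sketch below prepares an explicit batch of one, so that a call such as `rknn.inference(inputs=[batch])` receives a 4-D array. It assumes an NHWC uint8 input of 640x640, as in the example, and the helper names `bgr_to_rgb` and `to_nhwc_batch` are hypothetical.

```python
import numpy as np

IMG_SIZE = 640  # input size of the example yolov5s model


def bgr_to_rgb(img_bgr):
    """Reverse the channel axis; same result as cv2.cvtColor(img, cv2.COLOR_BGR2RGB)."""
    return np.ascontiguousarray(img_bgr[..., ::-1])


def to_nhwc_batch(img_rgb, size=IMG_SIZE):
    """Turn one (H, W, 3) image into a (1, H, W, 3) batch, checking the size first."""
    if img_rgb.shape != (size, size, 3):
        raise ValueError("expected a {0}x{0}x3 image, got {1}".format(size, img_rgb.shape))
    return np.expand_dims(img_rgb, axis=0)


# Dummy frame standing in for a resized cv2.imread() result (BGR order).
frame = np.zeros((IMG_SIZE, IMG_SIZE, 3), dtype=np.uint8)
frame[..., 0] = 255  # blue channel in OpenCV's BGR layout
batch = to_nhwc_batch(bgr_to_rgb(frame))
print(batch.shape)              # (1, 640, 640, 3)
print(batch[0, 0, 0].tolist())  # [0, 0, 255]: blue is now the last channel
```

The size check is a deliberate choice: a wrongly sized image fails loudly here instead of producing silently wrong detections.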

Running YOLOv5 on the Orange Pi

- Create a file named `deploy.py` with the following content. It expects `yolov5s.rknn` and a test image `bus.jpg` (for example, the one from the yolov5 example directory) in the current directory.

```python
import sys

import cv2
import numpy as np
from rknnlite.api import RKNNLite

RKNN_MODEL = 'yolov5s.rknn'
IMG_PATH = 'bus.jpg'

QUANTIZE_ON = True

OBJ_THRESH = 0.25
NMS_THRESH = 0.45
IMG_SIZE = 640

# COCO class names as in the RKNN example (some entries carry a trailing space)
CLASSES = ("person", "bicycle", "car", "motorbike ", "aeroplane ", "bus ", "train", "truck ", "boat", "traffic light",
           "fire hydrant", "stop sign ", "parking meter", "bench", "bird", "cat", "dog ", "horse ", "sheep", "cow", "elephant",
           "bear", "zebra ", "giraffe", "backpack", "umbrella", "handbag", "tie", "suitcase", "frisbee", "skis", "snowboard", "sports ball", "kite",
           "baseball bat", "baseball glove", "skateboard", "surfboard", "tennis racket", "bottle", "wine glass", "cup", "fork", "knife ",
           "spoon", "bowl", "banana", "apple", "sandwich", "orange", "broccoli", "carrot", "hot dog", "pizza ", "donut", "cake", "chair", "sofa",
           "pottedplant", "bed", "diningtable", "toilet ", "tvmonitor", "laptop ", "mouse ", "remote ", "keyboard ", "cell phone", "microwave ",
           "oven ", "toaster", "sink", "refrigerator ", "book", "clock", "vase", "scissors ", "teddy bear ", "hair drier", "toothbrush ")


def sigmoid(x):
    return 1 / (1 + np.exp(-x))


def xywh2xyxy(x):
    # Convert [x, y, w, h] to [x1, y1, x2, y2]
    y = np.copy(x)
    y[:, 0] = x[:, 0] - x[:, 2] / 2  # top left x
    y[:, 1] = x[:, 1] - x[:, 3] / 2  # top left y
    y[:, 2] = x[:, 0] + x[:, 2] / 2  # bottom right x
    y[:, 3] = x[:, 1] + x[:, 3] / 2  # bottom right y
    return y


def process(input, mask, anchors):
    # Decode one output head of shape (grid_h, grid_w, 3, 85)
    anchors = [anchors[i] for i in mask]
    grid_h, grid_w = map(int, input.shape[0:2])

    box_confidence = sigmoid(input[..., 4])
    box_confidence = np.expand_dims(box_confidence, axis=-1)

    box_class_probs = sigmoid(input[..., 5:])

    box_xy = sigmoid(input[..., :2]) * 2 - 0.5

    # Cell offsets; tiling with grid_h/grid_w keeps this correct for non-square grids too
    col = np.tile(np.arange(0, grid_w), grid_h).reshape(-1, grid_w)
    row = np.tile(np.arange(0, grid_h).reshape(-1, 1), grid_w)
    col = col.reshape(grid_h, grid_w, 1, 1).repeat(3, axis=-2)
    row = row.reshape(grid_h, grid_w, 1, 1).repeat(3, axis=-2)
    grid = np.concatenate((col, row), axis=-1)

    box_xy += grid
    box_xy *= int(IMG_SIZE / grid_h)  # stride: 8, 16 or 32 pixels per cell

    box_wh = pow(sigmoid(input[..., 2:4]) * 2, 2)
    box_wh = box_wh * anchors

    box = np.concatenate((box_xy, box_wh), axis=-1)

    return box, box_confidence, box_class_probs


def filter_boxes(boxes, box_confidences, box_class_probs):
    """Filter boxes with the box threshold. This differs slightly from the original YOLOv5 post-processing.

    # Arguments
        boxes: ndarray, boxes of objects.
        box_confidences: ndarray, confidences of objects.
        box_class_probs: ndarray, class_probs of objects.

    # Returns
        boxes: ndarray, filtered boxes.
        classes: ndarray, classes for boxes.
        scores: ndarray, scores for boxes.
    """
    boxes = boxes.reshape(-1, 4)
    box_confidences = box_confidences.reshape(-1)
    box_class_probs = box_class_probs.reshape(-1, box_class_probs.shape[-1])

    _box_pos = np.where(box_confidences >= OBJ_THRESH)
    boxes = boxes[_box_pos]
    box_confidences = box_confidences[_box_pos]
    box_class_probs = box_class_probs[_box_pos]

    class_max_score = np.max(box_class_probs, axis=-1)
    classes = np.argmax(box_class_probs, axis=-1)
    _class_pos = np.where(class_max_score >= OBJ_THRESH)

    boxes = boxes[_class_pos]
    classes = classes[_class_pos]
    scores = (class_max_score * box_confidences)[_class_pos]

    return boxes, classes, scores


def nms_boxes(boxes, scores):
    """Suppress non-maximal boxes.

    # Arguments
        boxes: ndarray, boxes of objects as [x1, y1, x2, y2].
        scores: ndarray, scores of objects.

    # Returns
        keep: ndarray, index of effective boxes.
    """
    x = boxes[:, 0]
    y = boxes[:, 1]
    w = boxes[:, 2] - boxes[:, 0]
    h = boxes[:, 3] - boxes[:, 1]

    areas = w * h
    order = scores.argsort()[::-1]  # highest score first

    keep = []
    while order.size > 0:
        i = order[0]
        keep.append(i)

        # Intersection of box i with every remaining box
        xx1 = np.maximum(x[i], x[order[1:]])
        yy1 = np.maximum(y[i], y[order[1:]])
        xx2 = np.minimum(x[i] + w[i], x[order[1:]] + w[order[1:]])
        yy2 = np.minimum(y[i] + h[i], y[order[1:]] + h[order[1:]])

        w1 = np.maximum(0.0, xx2 - xx1 + 0.00001)
        h1 = np.maximum(0.0, yy2 - yy1 + 0.00001)
        inter = w1 * h1

        ovr = inter / (areas[i] + areas[order[1:]] - inter)  # IoU
        inds = np.where(ovr <= NMS_THRESH)[0]
        order = order[inds + 1]  # drop boxes that overlap box i too much
    keep = np.array(keep)
    return keep


def yolov5_post_process(input_data):
    masks = [[0, 1, 2], [3, 4, 5], [6, 7, 8]]
    anchors = [[10, 13], [16, 30], [33, 23], [30, 61], [62, 45],
               [59, 119], [116, 90], [156, 198], [373, 326]]

    boxes, classes, scores = [], [], []
    for input, mask in zip(input_data, masks):
        b, c, s = process(input, mask, anchors)
        b, c, s = filter_boxes(b, c, s)
        boxes.append(b)
        classes.append(c)
        scores.append(s)

    boxes = np.concatenate(boxes)
    boxes = xywh2xyxy(boxes)
    classes = np.concatenate(classes)
    scores = np.concatenate(scores)

    # Class-wise NMS
    nboxes, nclasses, nscores = [], [], []
    for c in set(classes):
        inds = np.where(classes == c)
        b = boxes[inds]
        c = classes[inds]
        s = scores[inds]
        keep = nms_boxes(b, s)

        nboxes.append(b[keep])
        nclasses.append(c[keep])
        nscores.append(s[keep])

    if not nclasses and not nscores:
        return None, None, None

    boxes = np.concatenate(nboxes)
    classes = np.concatenate(nclasses)
    scores = np.concatenate(nscores)

    return boxes, classes, scores


def draw(image, boxes, scores, classes):
    """Draw the boxes on the image.

    # Arguments
        image: original image.
        boxes: ndarray, boxes of objects as [x1, y1, x2, y2].
        scores: ndarray, scores of objects.
        classes: ndarray, classes of objects.
    """
    for box, score, cl in zip(boxes, scores, classes):
        left, top, right, bottom = box
        print('class: {}, score: {}'.format(CLASSES[cl], score))
        print('box coordinate left,top,right,down: [{}, {}, {}, {}]'.format(left, top, right, bottom))
        left = int(left)
        top = int(top)
        right = int(right)
        bottom = int(bottom)

        cv2.rectangle(image, (left, top), (right, bottom), (255, 0, 0), 2)
        cv2.putText(image, '{0} {1:.2f}'.format(CLASSES[cl], score),
                    (left, top - 6),
                    cv2.FONT_HERSHEY_SIMPLEX,
                    0.6, (0, 0, 255), 2)


def letterbox(im, new_shape=(640, 640), color=(0, 0, 0)):
    # Resize and pad image while meeting stride-multiple constraints
    shape = im.shape[:2]  # current shape [height, width]
    if isinstance(new_shape, int):
        new_shape = (new_shape, new_shape)

    # Scale ratio (new / old)
    r = min(new_shape[0] / shape[0], new_shape[1] / shape[1])

    # Compute padding
    ratio = r, r  # width, height ratios
    new_unpad = int(round(shape[1] * r)), int(round(shape[0] * r))
    dw, dh = new_shape[1] - new_unpad[0], new_shape[0] - new_unpad[1]  # wh padding

    dw /= 2  # divide padding into 2 sides
    dh /= 2

    if shape[::-1] != new_unpad:  # resize
        im = cv2.resize(im, new_unpad, interpolation=cv2.INTER_LINEAR)
    top, bottom = int(round(dh - 0.1)), int(round(dh + 0.1))
    left, right = int(round(dw - 0.1)), int(round(dw + 0.1))
    im = cv2.copyMakeBorder(im, top, bottom, left, right, cv2.BORDER_CONSTANT, value=color)  # add border
    return im, ratio, (dw, dh)


if __name__ == '__main__':
    # Create RKNNLite object
    rknn = RKNNLite()

    # Load RKNN model
    print('--> Load RKNN model')
    ret = rknn.load_rknn(RKNN_MODEL)
    if ret != 0:
        print('Load RKNN model failed!')
        sys.exit(ret)

    # Init runtime environment
    print('--> Init runtime environment')
    ret = rknn.init_runtime(core_mask=RKNNLite.NPU_CORE_0_1_2)  # use all three NPU cores (0, 1, 2)
    # ret = rknn.init_runtime('rk3566')
    if ret != 0:
        print('Init runtime environment failed!')
        sys.exit(ret)
    print('done')

    # Set inputs: BGR -> RGB, plain resize to IMG_SIZE x IMG_SIZE (no letterbox)
    img = cv2.imread(IMG_PATH)
    if img is None:
        print('Cannot read image: {}'.format(IMG_PATH))
        sys.exit(1)
    # img, ratio, (dw, dh) = letterbox(img, new_shape=(IMG_SIZE, IMG_SIZE))
    img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
    img = cv2.resize(img, (IMG_SIZE, IMG_SIZE))

    # Inference
    outputs = rknn.inference(inputs=[img])

    # Post process: each head is (1, 255, H, W) for the 80-class COCO model;
    # reshape to (3, 85, H, W), then transpose to (H, W, 3, 85)
    input_data = []
    for output in outputs[:3]:
        output = output.reshape([3, -1] + list(output.shape[-2:]))
        input_data.append(np.transpose(output, (2, 3, 0, 1)))

    boxes, classes, scores = yolov5_post_process(input_data)

    img_1 = cv2.cvtColor(img, cv2.COLOR_RGB2BGR)
    if boxes is not None:
        draw(img_1, boxes, scores, classes)

    # Show output (needs a display, e.g. the Orange Pi desktop)
    cv2.imshow("post process result", img_1)
    cv2.waitKey(0)
    cv2.destroyAllWindows()

    rknn.release()
```

- Run the program

```bash
python deploy.py
```

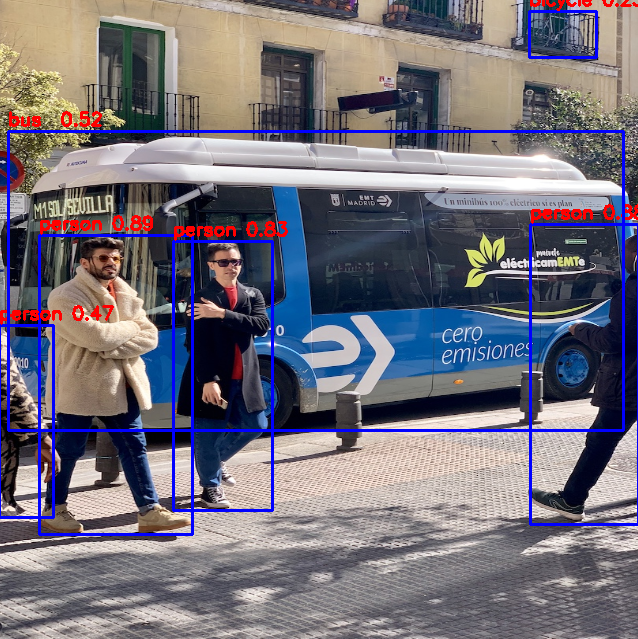

- Result

```
--> Load RKNN model
--> Init runtime environment
I RKNN: [13:00:34.125] RKNN Runtime Information: librknnrt version: 1.4.0 (a10f100eb@2022-09-09T09:07:14)
I RKNN: [13:00:34.125] RKNN Driver Information: version: 0.8.2
I RKNN: [13:00:34.125] RKNN Model Information: version: 1, toolkit version: 1.4.0-22dcfef4(compiler version: 1.4.0 (3b4520e4f@2022-09-05T20:52:35)), target: RKNPU v2, target platform: rk3588, framework name: ONNX, framework layout: NCHW
done
class: person, score: 0.8928694725036621
box coordinate left,top,right,down: [40.627504616975784, 235.50569766759872, 193.95716640353203, 534.8807769417763]
class: person, score: 0.878767728805542
box coordinate left,top,right,down: [531.5798395127058, 224.8939728140831, 639.2570745497942, 524.2690520882607]
class: person, score: 0.8309085965156555
box coordinate left,top,right,down: [174.62115895748138, 241.39454793930054, 273.0503498315811, 510.30296182632446]
class: person, score: 0.4691559970378876
box coordinate left,top,right,down: [0.81878662109375, 325.4185321331024, 54.147705078125, 517.9757549762726]
class: bicycle, score: 0.24984873831272125
box coordinate left,top,right,down: [530.9477665424347, 11.197928547859192, 597.0226924419403, 57.32749259471893]
class: bus , score: 0.5245066285133362
box coordinate left,top,right,down: [9.820066332817078, 131.40282291173935, 624.8392354249954, 430.7779021859169]
```
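Because `deploy.py` resizes to 640x640 with a plain `cv2.resize` instead of `letterbox`, the printed boxes are in the coordinates of the resized image, which is also the image that gets drawn on. To draw them on the original photo instead, each axis has to be scaled back separately. Below is a sketch with a hypothetical helper `scale_boxes_to_original`. It assumes you saved the original `img.shape` before resizing, and it does not apply if you switch to the commented-out `letterbox` call, which also adds padding.

```python
import numpy as np

IMG_SIZE = 640  # deploy.py resizes every frame to IMG_SIZE x IMG_SIZE


def scale_boxes_to_original(boxes, orig_w, orig_h, img_size=IMG_SIZE):
    """Map [x1, y1, x2, y2] boxes from the resized img_size x img_size frame
    back to an orig_w x orig_h image. Only valid for a plain resize (no letterbox)."""
    out = np.asarray(boxes, dtype=np.float32).reshape(-1, 4).copy()
    out[:, [0, 2]] *= orig_w / img_size  # x coordinates scale with the width
    out[:, [1, 3]] *= orig_h / img_size  # y coordinates scale with the height
    # Keep boxes inside the image in case a prediction reaches slightly past the edge.
    out[:, [0, 2]] = out[:, [0, 2]].clip(0, orig_w)
    out[:, [1, 3]] = out[:, [1, 3]].clip(0, orig_h)
    return out


# Hypothetical 1280x720 photo: x is stretched by 2.0, y by 1.125.
print(scale_boxes_to_original([[160, 160, 320, 320]], orig_w=1280, orig_h=720).tolist())
# [[320.0, 180.0, 640.0, 360.0]]
```

In `deploy.py` this would mean reading `h, w = img.shape[:2]` right after `cv2.imread` and drawing the scaled boxes on an unresized copy of the image.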

References

Source code

- https://github.com/rockchip-linux/rknpu2
- https://github.com/rockchip-linux/rknn-toolkit2
- https://github.com/ultralytics/yolov5

ROC-RK3588-PC

- https://wiki.t-firefly.com/zh_CN/ROC-RK3588-PC/usage_npu.html

[Edge devices] YOLOv5 training, RKNN model export and deployment on RK3588 ~ 1. Environment setup (tested and working)

- https://blog.csdn.net/zhoujinwang/article/details/132320665

Orange Pi 5 RK3588: converting YOLOv5 to RKNN and deploying it, a full record of the pitfalls (orangepi 5)

- https://blog.csdn.net/m0_55217834/article/details/130583886


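Signature: Smile every day

Name: 宏沉一笑

Email: whghcyx@outlook.com

Personal website: https://whg555.github.io

Please credit the source when reposting. You are welcome to check the sources cited in this article and to point out anything that is wrong or unclear, in the comments or by email to whghcyx@outlook.com.

Article title: 香橙派-RKNN-Yolov5 (Orange Pi-RKNN-YOLOv5)

Author: 宏沉一笑

Published: 2024-03-28, 10:36:30

Last updated: 2024-03-30, 11:58:32

Original link: https://whghcyx.gitee.io/2024/03/28/AI-2024-03-28-%E9%A6%99%E6%A9%99%E6%B4%BE-RKNN-Yolov5/

License: "Attribution-NonCommercial-ShareAlike 4.0". When reposting, please keep the original link and the author.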